Serena Williams won her third consecutive US Open tennis title a few days ago, helped by obvious factors such as physical strength and endurance. But how much did her brain, and its egocentric and allocentric functions, help the American tennis star retain the cup?

Quite significantly, say York University neuroscience researchers. Their recent study shows that different regions of the brain are used to visually locate objects relative to one’s own body (self-centred or egocentric) and relative to external visual landmarks (world-centred or allocentric).

“The current study shows how the brain encodes allocentric and egocentric space in different ways during activities that involve manual aiming,” explains Distinguished Research Professor Doug Crawford of the Department of Psychology, Faculty of Health. “Take tennis for example. Allocentric brain areas could help aim the ball toward the opponent’s weak side of play, whereas the egocentric areas would make sure your muscles return the serve in the right direction.”

The study’s findings will help health-care providers develop therapeutic treatments for patients with damage to the brain areas that support these two functions, according to the neuroscientists at York’s Centre for Vision Research.

“As a neurologist, I am excited by the finding because it provides clues for doctors and therapists on how they might design different therapeutic approaches,” says Ying Chen, lead researcher and a PhD candidate in the School of Kinesiology and Health Science.

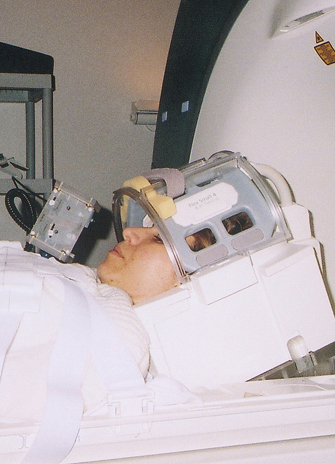

The study, “Allocentric versus Egocentric Representation of Remembered Reach Targets in Human Cortex,” published in the Journal of Neuroscience, was conducted using the state-of-the-art fMRI scanner at York University’s Sherman Health Science Research Centre. A dozen participants were tested using the scanner, which Chen modified to distinguish brain areas related to these two functions.

The participants completed three different tasks involving remembered visual targets: egocentric reach (remembering the absolute target location), allocentric reach (remembering the target location relative to a visual landmark) and a non-spatial control, colour report (reporting the colour of the target).

When participants remembered egocentric targets’ locations, areas in the upper occipital lobe (at the back of the brain) encoded visual direction. In contrast, lower areas of the occipital and temporal lobes encoded object direction relative to other visual landmarks. In both cases, the parietal and frontal cortex (near the top of the brain) coded reach direction during the movement.